This post is one of many where I write about everything I’m learning about the AWS cloud. The last article was on AWS’ managed relational database service. In this post, I’ll discuss Amazon S3, the AWS object storage service.

Navigation

2. EC2 Storage

4. Auto Scaling

5. AWS RDS

6. Amazon S3 (This article)

Amazon S3

Amazon Simple Storage Service (Amazon S3) is an object storage service that is one of the main building blocks of the AWS Cloud. Amazon S3 can store, organise and share data for various business purposes. It can be used in the following applications:

- Data backup and storage

- Disaster Recovery

- Archiving

- Hybrid Cloud Storage

- Application Hosting

- Media hosting

- Data lakes & big data analytics

- Software updates delivery

- Static websites

How it works

Buckets

Data in S3 is stored as objects in buckets. Objects are the fundamental entities stored in a bucket. Buckets are like folders and must have a globally unique name across regions and accounts. Buckets are created at the region level, and bucket names should contain numbers, lowercase letters and hyphens only.

Objects

Files are called objects. Each object has data, a key (unique within a bucket), and metadata such as when the object was last modified and its content type. Objects can be files, images, videos, or any other data type. The key is a full path to the object and looks something like this s3://my-bucket/my_file.txt. A key is made up of a prefix and an object name. The maximum object size you can upload is 5GB. You can use the multi-part upload feature to upload a file bigger than 5GB. Each object you upload to S3 has a URL that can be made public.

Security in Amazon S3

Buckets and objects can be protected in three ways:

Encryption: AWS does server-side encryption automatically before saving your data to disk and decrypts it when you download it. You can also encrypt data yourself on the client side before uploading it.

User-Based Policies: IAM Policies. These policies stipulate what API calls to S3 should be allowed for a specific user.

Resource-Based Policies: These policies can be attached to a resource such as an S3 bucket. With resource-based policies, you can specify who has access to a resource and what actions they can perform. You can create ACLs(Access Control Lists) for Buckets and Objects using these policies.

An IAM Principal (user, user account or application) can only access an S3 object if two conditions are true:

- The user’s IAM permissions or a resource policy allows the action

- There is no explicit DENY rule for the user.

Below is an example of a Bucket Policy.

"Version": "2012-10-17",

"Statement": [

{

"Sid": "PublicRead",

"Effect": "Allow",

"Principal": "*",

"Action": [

"s3:GetObject"

],

"Resource": [

"arn:aws:s3:::examplebucket/*"

]

}

]

Resource: buckets and objects you want the policy to apply to

Effect: Allow/Deny

Action: Set of API actions to ALLOW or DENY

Principal: Who you’re allowing to access the bucket

The example above allows anyone to read the contents of the examplebucket.

Bucket Policies can be used to add all sorts of rules to a bucket or the objects in it; for example, you can add a rule to make a file publicly available or to force encryption on upload.

Granting Access to Buckets

Access to buckets can be granted in the following ways:

1) Use IAM permissions to allow access to the bucket

2) Use Bucket policies to allow IAM user access to the bucket

3) To allow EC2 instance access to an S3 bucket, use IAM Roles.

4) To allow cross-account access, create a Bucket Policy.

Amazon S3 Versioning

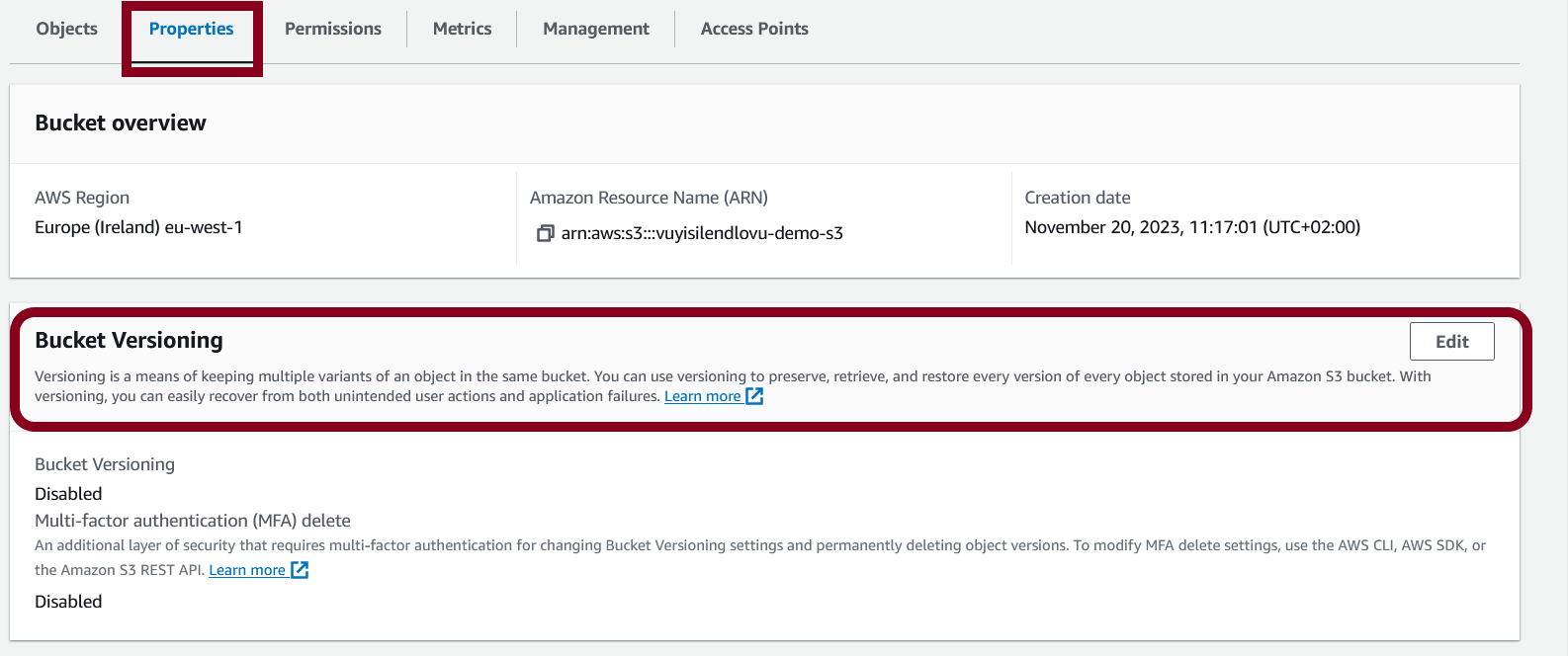

Versioning in S3 allows you to keep multiple variants or versions of a file in the same S3 bucket. When versioning is enabled, you can roll back, recover or restore files easily when unintended failures or application crashes occur. Enabling versioning can help you recover files from accidental deletion and overwrites. The image below shows how to enable versioning in the AWS Console:

Amazon S3 Replication

Replication enables automatic, asynchronous copying of objects from one bucket to another. Replication can be done for buckets owned by the same AWS account or by different accounts. The source and destination buckets can be in the same region or in different regions.

Cross-region replication use cases: compliance, lower latency access and replication across accounts.

Same-region replication use cases: log aggregation and live replication between production and test environments.

Only new objects are replicated after enabling replication. To replicate existing objects, use S3 Batch replication.. Batch Replication replicates existing objects and objects that failed replication.

When replication is enabled and an object is deleted, AWS adds a delete marker to the object to prevent accidental deletions. If delete marker replication is enabled, these markers are replicated or copied over to the destination buckets. In both instances, AWS behaves as if the object has been deleted. Think of a delete marker as putting something in the recycle bin on Windows. It is out of sight but not gone. Delete markers are not replicated by default; they can be enabled in the “Replication Rules” in the S3 Management settings.

Steps to Enable Replication

- Create source and destination buckets

- Enable versioning on each bucket

- Create or choose an IAM Role for the versioning.

- Specify which objects to version

- Enable replication

When you enable replication, any files you add or modify in Bucket A will be copied over and modified in Bucket B as well.

Conclusion

This article overviewed what Amazon S3 is and discussed its features such as security, versioning and replication. The next article will be about the different storage classes in S3.