Introduction

Downtime in a database system can occur due to various reasons such as hardware failures, power outages, software issues or maintenance activities. Downtime translates directly to revenue loss and customer dissatisfaction. Because of this, It is important to build database systems with High Availability.

High Availability in the context of database systems refers to the capability of a system to minimise or eliminate downtime in order to remain accessible and operational without interruptions, ensuring continuous service delivery to users and applications. In this blog post I’ll go over different strategies to achieve High Availability and focus specifically on replication with PostgreSQL and Docker.

High Availability Solutions

To build highly available database systems, database administrators have to implement one or more of the solutions listed below:

Standby Replication

Standby replication involves creating a replica or standby server that mirrors the primary PostgreSQL database. This replica is kept up to date with changes from the primary server, providing a failover option in case the primary server becomes unavailable. In the event of a failure, the standby server can be promoted into a primary.

Replication can be done in one of several ways including, log shipping, streaming replication, cascading replication and logical replication. PostgreSQL stores a transactional log that stores changes made to the database in a file called a Write Ahead Log (WAL). In PostgreSQL, the primary server generates WAL records as transactions are saved to the database. The replica or standby servers use these WAL records to synchronise their state with the primary server.

Log Shipping Replication

- Log shipping is a replication method where the transaction log files from the primary server are periodically shipped to a standby server.

- Log shipping has minimal performance impact on the primary server but there is some delay in synchronisation between the primary and the replica because the primary doesn’t ship its logs until the log file is filled up to a certain point.

- A standby server is generally not available for access but can be configured as a hot standby. In this mode log files are sent over as soon as they are available. In hot standby, the standby server can be used for read only queries.

- Log shipping is an old school way of doing replication. Newer ways of doing the same thing are streaming and logical replication.

Streaming Replication

This type of replication streams changes from the primary to the standby instances continuously and results in near real-time synchronisation. The changes are replicated for the entire database; you cannot filter out specific tables. The configuration of the primary and standby servers must be the same.

- Fast and safe method of replication.

- Asynchronous, minimal lag, easy to change between async and sync replication.

- Failover possible. Secondary can take over if primary fails.

- Read only queries can be distributed on the secondary.

- Short lag time that depends on db load, network connection, streaming settings.

- Primary server doesn’t wait for confirmation that secondary has committed the results.

Cascading Replication

Cascading replication is a form of streaming replication where a standby server replicates from another standby server, rather than directly from the primary, meaning replication changes “cascade” down through a hierarchy of servers.

The cascading replication feature allows a standby server to accept replication connections and stream WAL records to other standbys, acting as a relay. This can be used to reduce the number of direct connections to the primary and also to minimize inter-site bandwidth overheads.

A standby acting as both a receiver and a sender is known as a cascading standby. Standbys that are more directly connected to the primary are known as upstream servers, while those standby servers further away are downstream servers. Cascading replication does not place limits on the number or arrangement of downstream servers, though each standby connects to only one upstream server which eventually links to a single primary server.

A cascading standby sends not only WAL records received from the primary but also those restored from the archive. So even if the replication connection in some upstream connection is terminated, streaming replication continues downstream for as long as new WAL records are available.

Logical Replication

Logical replication also works with the WAL records of the primary instance. But, instead of sending the changes as-is in the binary format, the WAL records are decoded into logical changes(SQL statements) that are then replicated to the standbys. You can pick which tables to replicate, and the primary and standbys configuration doesn’t need to be the same. You can use tools like pglogical to enable logical replication in PostgreSQL.

FailOver

Failover is the process of automatically or manually switching to a standby server when the primary server becomes unavailable.

Manual Failover

In manual failover scenarios, administrators can use the pg_ctl command to promote a standby server to the primary role. This is a controlled process often performed during planned maintenance or when responding to detected issues. I’ll demonstrate how to promote a standby server to a primary in the next section.

Automatic Failover Using Open Source Tools

PostgreSQL does not support automatic failover out of the box, but several open-source tools can automate the failover process. Tools like Patroni, Repmgr, PAF and PgPool-II monitor the health of the primary server and orchestrate failover when needed.

Failback:

Failback involves restoring the original primary server into rotation after it has been repaired or brought back online. This process ensures a return to the normal operational state.

Ensuring Data Integrity During Failover:

- Manual Failover: Administrators can use the

pg_rewindcommand or use open-source tools like Patroni, Repmgr, or PAF to perform a controlled re-synchronization of the primary server after recovery. - Automatic Failover Tools: These tools typically handle the failback process automatically, ensuring that changes made to the secondary server while the primary was unavailable are not lost during the failover and failback operations.

Questions to guide your decision making process when implementing High Availability

The right High Availability solution for you depends on your specific needs. I asked ChatGPT to generate a list of questions worth asking when making a decision about which replication and failover option to go with. These are the questions it gave me:

- Business Requirements:

- What is the acceptable downtime for your application?

- How critical is data consistency during failover or maintenance?

- What are the potential financial implications of downtime?

- Database Architecture:

- Are you using a single-node or multi-node architecture for your PostgreSQL deployment?

- What is the current load and growth projection for your database?

- Do you have read and write separation requirements?

- Replication Considerations:

- Do you prefer physical or logical replication?

- What level of data consistency and synchronization do you require between nodes?

- Is synchronous replication necessary, and if so, how will it impact performance?

- Failover and Recovery:

- How quickly do you need to recover from a database failure?

- Is automatic failover a critical requirement, or is manual failover acceptable?

- What is the strategy for promoting a standby server to a primary role?

- Operational Aspects:

- What is the expertise level of your operations team in managing PostgreSQL?

- Are there specific compliance or security requirements that influence your HA strategy?

- Do you need to perform maintenance activities without disrupting the service?

- Scalability and Performance:

- How will the chosen HA approach impact the scalability of your PostgreSQL deployment?

- What is the expected performance overhead of the chosen replication method?

- Infrastructure and Environment:

- Are you deploying PostgreSQL in a cloud environment, on-premises, or in a hybrid setup?

- What networking infrastructure and security measures are in place?

- Is there a need for geographic distribution or multi-region deployment?

- Monitoring and Maintenance:

- What tools and processes will you use for monitoring the health of your PostgreSQL instances?

- How will you handle software upgrades and routine maintenance without causing downtime?

- Cost Considerations:

- What is the budget for implementing high availability in your PostgreSQL deployment?

- Are there cost differences between various HA solutions, considering both infrastructure and operational expenses?

- Community and Support:

- What level of community support and documentation is available for the chosen HA approach?

- Is commercial support from PostgreSQL vendors or third-party providers an option?

Streaming Replication using PostgreSQL and Docker

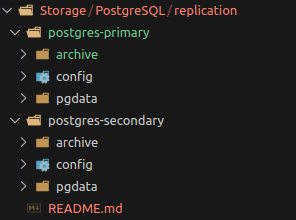

In this section, I will demonstrate how to implement streaming replication with manual failover using Docker. If you would like to follow along, you can download the config and walkthrough files for this post from GitHub. To make replication work, we need two postgres instances; postgres-1, the primary instance and postgres-2, a secondary instance. We’ll create these two using the same postgres docker image. My folder structure looks like this:

I have the config files saved in the storage/databases/postgresql/replication path. The archive directory is where postgres will save backups. Config files go into the config directory and the database files into pgdata. postgres-primary contains the primary server’s configuration.

Setting up a primary PostgreSQL server

Before setting up the primary and secondary servers, you’ll want to:

- Setup data volumes for each server

- Setup unique config files for each instance

- Create and run docker containers in the same network

Steps

Create a new docker network to allow instances to talk with each other

docker network create postgres

Configure primary instance

Create a file called postgresql.conf in the file path created above and add the following configuration to it. This configuration configures the port postgreSQL will listen on, enables replication and sets other necessary information.

# postgresql.conf data_directory = '/data' hba_file = '/config/pg_hba.conf' ident_file = '/config/pg_ident.conf' port = 5432 listen_addresses = '*' # Makes the server listen on all interfaces max_connections = 100 # Max concurrent connections shared_buffers = 128MB dynamic_shared_memory_type = posix max_wal_size = 1GB min_wal_size = 80MB log_timezone = 'Etc/UTC' datestyle = 'iso, mdy' timezone = 'Etc/UTC' # locale settings lc_messages = 'en_US.utf8' # locale for system error message lc_monetary = 'en_US.utf8' # locale for monetary formatting lc_numeric = 'en_US.utf8' # locale for number formatting lc_time = 'en_US.utf8' # locale for time formatting default_text_search_config = 'pg_catalog.english' # replication # allows connection from replication or standby servers. Determines the level of information written to the WAL. Setting it to replica writes enough data to support WAL archiving and replication wal_level = replica archive_mode = on # allows archiving of WAL files usinge archive_command. Completed WAL files are sent to archive storage archive_command = 'test ! -f /mnt/server/archive/%f && cp %p /mnt/server/archive/%f' # This sets the command to archive a WAL file. The %f represents the filename and the %p represents the file path name max_wal_senders = 3 # number of concurrent connections from standby servers i.e backup clients. Abrupt disconnection of a streaming client might leave an orphaned connection slot behind until a timeout is reaches, so this param should eb set slightly higer than the maximum number of expected clients so disconnected clients can immediately reconnect.

Postgres does not accept a transaction until it has been written to the transaction log and saved to disk. If there is ever a system crash, the database can be recovered from the transaction log. We enable archiving of the WAL files by setting the wal_level and archive_mode settings.

Next, ensure you’re in the storage/databases/postgresql/replication and start the primary database container instance:

cd storage/databases/postgresql/replication docker run -it --rm --name postgres-1 \ -- network postgres \ -e POSTGRES_USER=postgresadmin \ -e POSTGRES_PASSWORD=BgqzYc4lM7OypUh \ -e POSTGRES_DB=postgresdb \ -e PGDATA= "/data" \ -v $PWD/postgres-primary/pgdata:/data \ -v $PWD/postgres-primary/config:/config \ -v $PWD/postgres-primary/archive:/archive \ -p 5000:5432 \ postgres:15 -c 'config_file=/config/postgresql.conf

This command starts a new docker container named postgres-1 which will be the primary postgres instance and creates a new database called postgresdb. It also configures postgres with a data directory, mounts data volumes, maps ports and specifies the config file to use. Next, we’ll create a replication user, enable replication and backup the database to one of the volumes we created.

Create a Replication User

Create a new user account with replication permission to take backups of the database.

Login to postgres-1:

docker exec -it postgres-1 bash # Create a new user createuser -U postgresadmin -P -c 5 --replication replicationUser exit

Let’s break down the command:

createuser: This is a command-line utility that comes with PostgreSQL. It’s used to create a new PostgreSQL user (also known as a role).-U postgresadmin: The-Uflag is used to specify the name of the existing PostgreSQL user that will create the new user. In this case,postgresadminis the existing user.-P: This flag prompts for the password of the new user. You’ll be asked to enter this password twice for confirmation.-c 5: The-cflag is used to set the maximum number of concurrent connections that the new user can make to the database. In this case, the new user will be able to make up to 5 concurrent connections.--replication: This flag gives the new user the ability to initiate streaming replication and backup. Streaming replication allows a database to maintain a copy of data on a remote server.replicationUser: This is the name of the new user that will be created.

So, in summary, this command creates a new PostgreSQL user named replicationUser with the ability to make up to 5 concurrent connections and initiate streaming replication. The new user’s password will be prompted for input. The command is executed by an existing PostgreSQL user named postgresadmin.

Take a base backup

In the previous steps, we created a database. Now, we’ll backup the database and copy it to the secondary container’s data volume(we haven’t started this container yet).

Use pg_backup utility. The utility is in the docker postgresql image so we can run it without running a db by changing the entrypoint command.

Mount the blank data directory as we will make a new backup in there:

cd storage/databases/postgresql/replication

docker run -it --rm \

--network postgres \

-v ${PWD}/postgres-secondary/pgdata:/data \

--entrypoint /bin/bash postgres:15

Take the backup by logging into postgres-1 with replicationUser and writing the backup to /data

pg_basebackup -h postgres-1 -p 5432 -U replicationUser -D /data/ -Fp -Xs -R

The pg_basebackup command takes a plain format base backup of the primary PostgreSQL server running on host postgres-1 and port 5432. The backup is performed by the user replicationUser, and the backup files are stored in the directory /data/. Additionally, the backup is configured for synchronous mode (-Xs), and it includes all the files needed to set up a standby server (-R). This backup is suitable for setting up a replication standby server.

You should now see PostgreSQL data ready for our instance in ${PWD}/postgres-secondary/pddata

Set up Standby PostgreSQL Instance

cd storage/databases/postgresql/replication

docker run -it --rm --name postgres-2 \

--net postgres \

-e POSTGRES_USER=postgresadmin \

-e POSTGRES_PASSWORD=BgqzYc4lM7OypUh\

-e POSTGRES_DB=postgresdb \

-e PGDATA="/data" \

-v ${PWD}/postgres-secondary/pgdata:/data \

-v ${PWD}/postgres-secondary/config:/config \

-v ${PWD}/postgres-secondary/archive:/mnt/server/archive \

-p 5001:5432 \

postgres:15 -c 'config_file=/config/postgresql.conf'

and that you have standby.signal file in place (existence of this file will force postgres to run as slave or secondary server).

ls -la /var/lib/postgresql/15/main/ | grep standby

Test the replication

Test the replication by creating a new table in postgres-1, the primary instance

# Login to postgres psql --username=postgresadmin postgresdb # Create table CREATE TABLE customers (firstname text, customer_id serial, date_created timestamp); # Show the table \dt # Show that there are no records postgresdb=# select * from customers; │ firstname | customer_id | date_created │-----------+-------------+-------------- │(0 rows) # Confirm no records in secondary

Create a record in the primary database

INSERT INTO customers (firstname, date_created) VALUES ('Vuyisile', CURRENT_TIMESTAMP);

SELECT * FROM customers;

postgresdb=# select * from customers;

│ firstname | customer_id | date_created

│-----------+-------------+----------------------------

│ Vuyisile | 1 | 2023-12-13 04:14:08.052152

│(1 row)

Login to postgres-2 secondary and view the table and data:

docker exec -it postgres-2 bash # Login to postgres psql --username=postgresadmin postgresdb # Show the tables \dt # Check for data in the table SELECT * FROM customers; postgresdb=# select * from customers; │ firstname | customer_id | date_created │-----------+-------------+---------------------------- │ Vuyisile | 1 | 2023-12-13 04:14:08.052152 │(1 row)

Failover

Postgres does not have built-in automatic failover, it requires 3rd party tooling to achieve. So If postgres-1 fails we use a utility called pg_ctl to promote the standby server to a primary server. After promoting the standby you could choose to setup a new standby server and configure replication to it or fail back to the original server once you have resolved the failure.

Let’s stop the primary server to simulate failure:

docker rm -f postgres-1 Then log into postgres-2 and promote it to primary: docker exec -it postgres-2 bash # confirm we cannot create a table as its a stand-by server CREATE TABLE customers (firstname text, customer_id serial, date_created timestamp); # run pg_ctl as postgres user (cannot be run as root!) runuser -u postgres -- pg_ctl promote # confirm we can create a table as its a primary server CREATE TABLE customers (firstname text, customer_id serial, date_created timestamp);

The standby file should be removed automatically

ls -la /var/lib/postgresql/15/main/ | grep standby

Conclusion

This post discussed approaches to achieving High Availability in PostgreSQL such as log shipping, streaming replication and cascading replication. It touched on the different tools you can use to automate monitoring, replication, failover and failback of postgres instances and listed ten questions you can ask to guide your decision making when choosing a solution. In the last section, you saw how to implement streaming replication with manual failover using Postgres and Docker.

Additional reading: